Beyond the Productivity Boost: My Evolving AI Collaboration Playbook

Published on June 30, 2025

The AI Transformation in My Workflow

In my previous article, I shared how integrating Google Gemini and Code Assist into my development workflow delivered an immediate, dramatic productivity boost. That initial foray into AI-assisted programming allowed me to build the Bug Hunter Toolkit in just three weeks – a truly transformative experience.

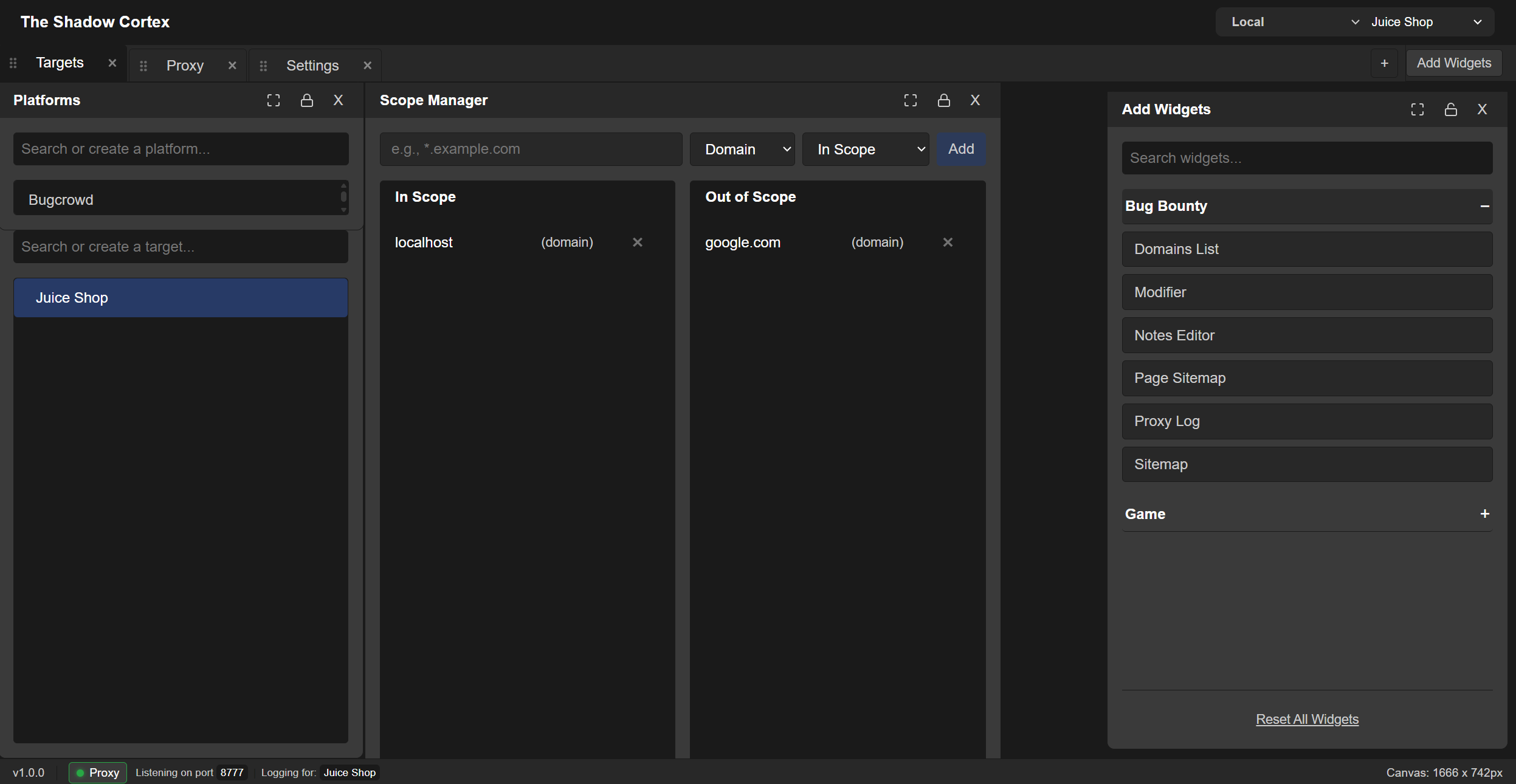

However, as I continue my journey and take on more complex projects, I’m discovering something crucial: simply having powerful AI tools isn’t enough. My current endeavor, The Shadow Cortex (TSC), is designed to be an even more robust and comprehensive security platform, and building it is teaching me that true mastery in this new era of development lies in refining my own mindset and learning how to approach each AI tool individually.

Adapting to AI Personalities

It’s much like my past experience as a manager in application security. Just as an effective manager adapts their style to each unique direct report – understanding their strengths, weaknesses, and how best to communicate with them – I’m learning to adapt my interaction style to the distinct “personalities” and capabilities of different AI tools. Gemini’s chat interface, with its broader context and conversational flow, requires a different approach than Code Assist, which operates with a more limited, file-specific awareness.

A Strategic Two-Phase Approach

This realization is leading me to develop a more strategic, two-phase process for tackling complex features. In this post, I want to pull back the curtain on this evolving playbook.

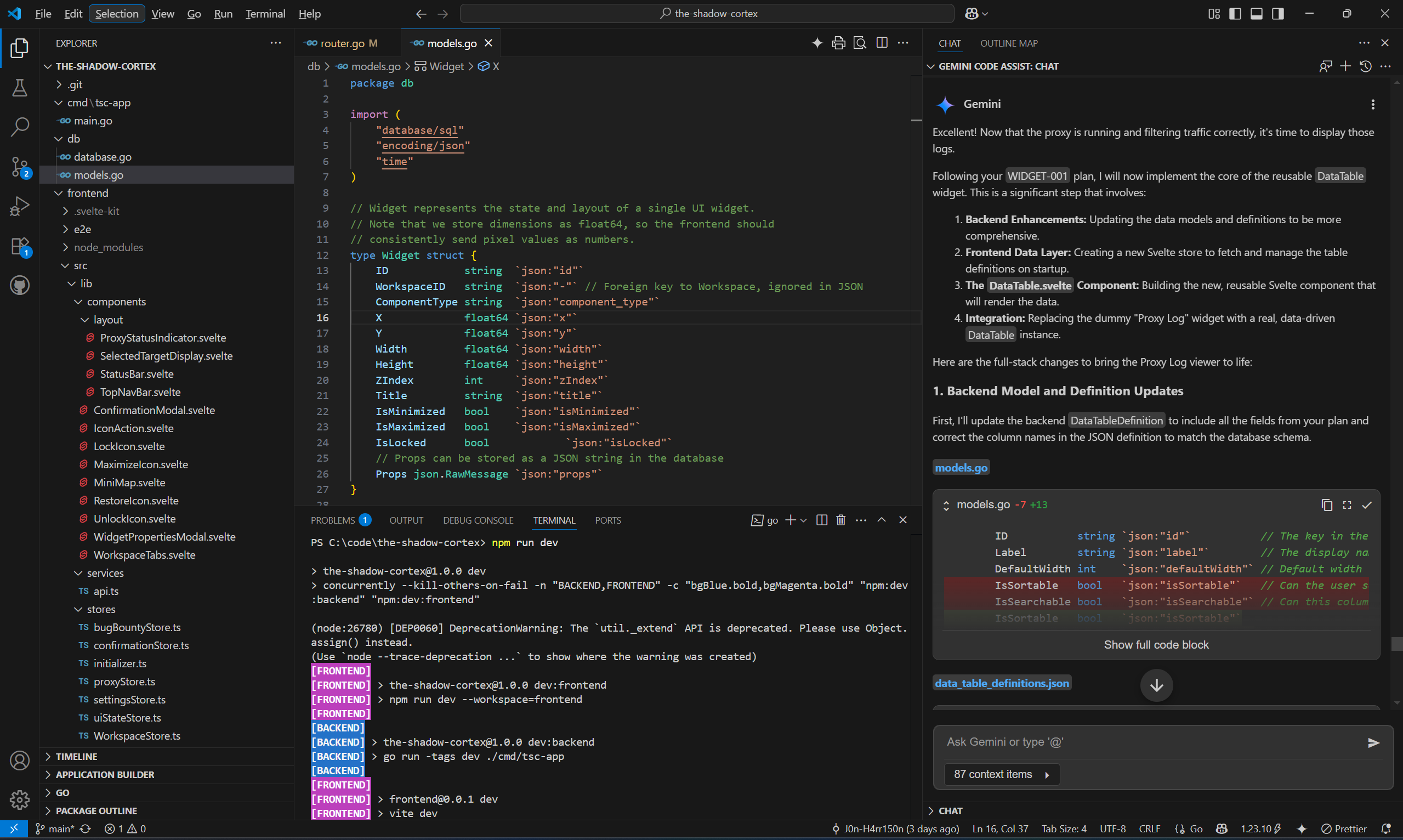

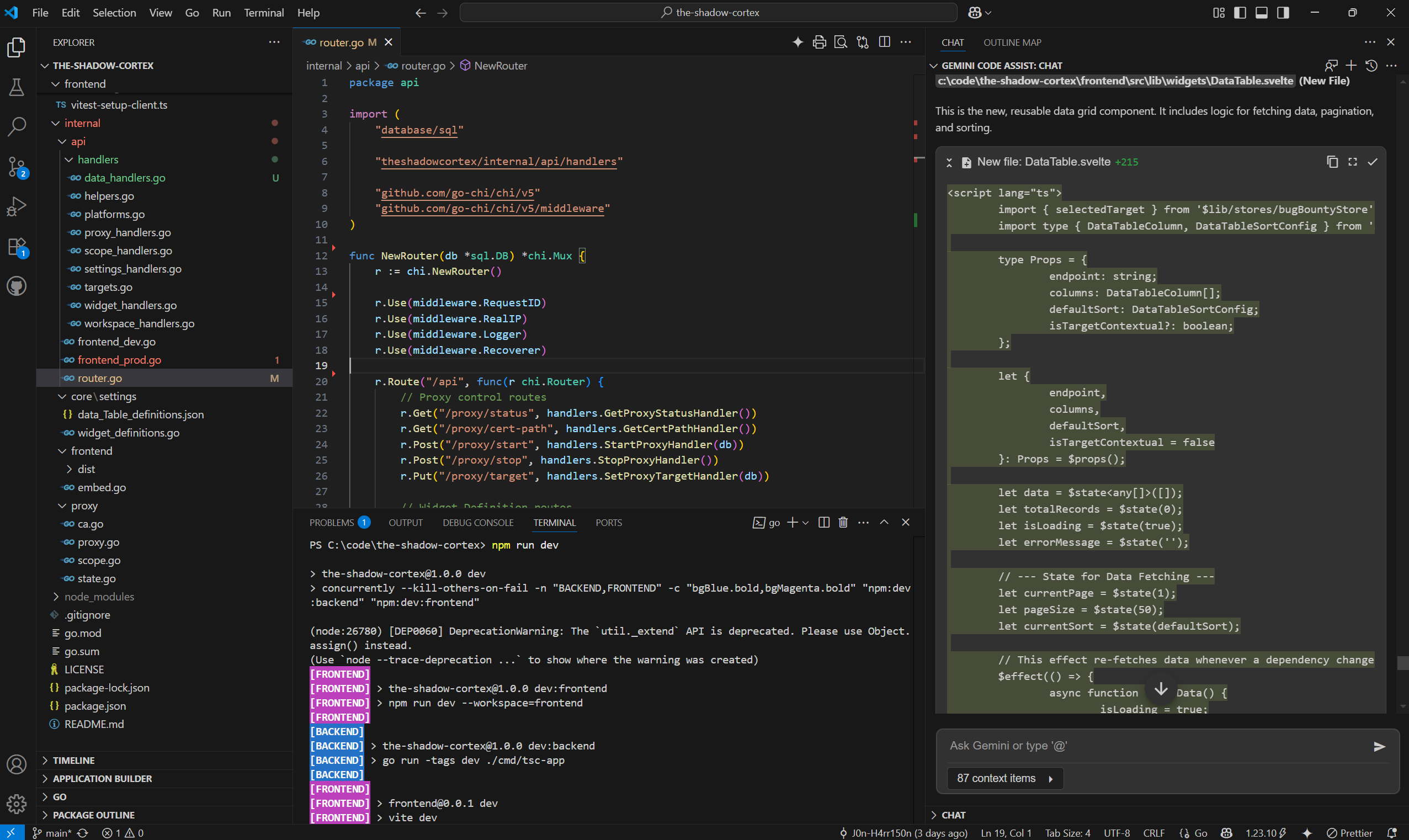

First, I’ll show how I now use Gemini in a chat session for high-level project management and brainstorming. This phase turns nebulous ideas into a detailed, executable plan. Second, I’ll demonstrate how that carefully crafted plan becomes the blueprint for precise code generation with Gemini Code Assist in my IDE, despite its narrower context window. The two phases complement each other, with conceptualization feeding execution, and together they let me build features like the Data Grid for TSC with far more clarity and efficiency than before.

Navigating the Hurdles of AI Collaboration

The Adventure of “Programming Adventures”

Despite the undeniable productivity boost, my “programming adventures” with Gemini were far from a seamless, magical experience. The journey was filled with unexpected turns.

Addressing Context Loss

One of the most significant hurdles I encountered was Gemini’s tendency to lose context during longer chat sessions. It felt like, after a certain number of turns or once the conversation became sufficiently complex, the model would start to “forget” earlier details or requirements, leading to irrelevant or even contradictory suggestions. This meant I couldn’t just keep asking follow-up questions indefinitely; I had to find ways to periodically “reset” the context or condense prior information.

Overcoming Code Assist Misunderstandings

Compounding this was the behavior of Gemini Code Assist. While powerful for boilerplate and quick suggestions, it sometimes seemed to misunderstand my intent entirely. This led to code snippets that were syntactically correct but logically flawed, or that simply didn’t fit the existing codebase. There were moments where it felt like Code Assist was actively “messing things up,” and I’d spend time debugging its suggestions rather than leveraging them for speed.

Developing a “Prompt Engineering” Intuition

These challenges weren’t roadblocks; they were learning opportunities. I quickly realized that working with an AI like Gemini wasn’t about simply dictating tasks. It was about developing a new kind of “prompt engineering” intuition: a balance of clarity, conciseness, and strategic re-contextualization. I had to learn how to break complex problems down into smaller, digestible prompts, provide explicit examples, and actively verify every piece of code it generated. This iterative “trust but verify” mindset became fundamental to my workflow.

Phase 1: Gemini Chat as My Project Manager & Brainstorming Partner

My process for defining a new, complex feature like The Data Grid for The Shadow Cortex (TSC) begins not with writing code, but with deep dives into Gemini’s chat interface. I treat Gemini as an incredibly knowledgeable, tireless project manager and brainstorming partner. We start with the high-level concept, and through a series of iterative prompts and probing questions from both sides, we refine the idea, identify dependencies, and eventually break it down into a highly granular, file-by-file implementation plan.

This is where the power of Gemini’s broader context window in chat truly shines. It can hold the entire feature in mind as we discuss architectural patterns, data flow, security considerations, and UI/UX implications. The output of such a session is not just a vague idea, but a complete roadmap.

Below is the exact, detailed implementation plan for WIDGET-001: The Data Grid that I generated with Gemini chat, which then served as my precise guide for coding with Gemini Code Assist. I’m sharing it here so you can see the level of detail that works effectively for this two-phase approach.

[BEGIN WIDGET-001: THE DATA GRID - DETAILED IMPLEMENTATION PLAN]

Feature: WIDGET-001: The Data Grid - Detailed Implementation Plan

Context for Gemini Code Assist: This plan outlines the implementation of The Data Grid, a core feature for The Shadow Cortex (TSC) (Go backend, SvelteKit frontend). This widget will be a highly versatile and reusable data table component capable of displaying any tabular data dynamically, with advanced filtering, sorting, pagination, and search capabilities.

Goal: To create a single, powerful Svelte component (DataTable.svelte) that can serve as the display for all tabular data throughout TSC (e.g., Proxy Logs, Domains, Findings, Game Challenge Attempts), fully backed by server-side processing for performance.

Key Programming Concepts Across Files:

Go Backend: HTTP handler logic, database interactions, custom query parsing, struct definitions, data aggregation for pagination/filtering.

SvelteKit Frontend: Svelte components (.svelte), TypeScript logic (.ts), Svelte stores (writable), reactive statements ($: ), API fetching, event handling, component props, CSS for styling.

Detailed File-by-File Breakdown:

1. Go Backend Modifications

File: internal/db/models.go

Purpose: Define new Go structs that represent the data models for DataTableDefinition and its sub-components, used by the backend and sent to the frontend.

Programming Concepts/Content:

W001T001: Define DataTableDefinition struct.

W001T002: Define DataTableColumn struct (for column configuration).

W001T003: Define DataTableSortConfig struct (for default sorting).

W001T004: Add any other helper structs needed for table configuration (e.g., DataTableFilterConfig if distinct).

Ensure all fields have json:"fieldName" tags.

File: internal/core/data_query_parser/parser.go (NEW FILE)

Purpose: Implement the custom search query parser that translates the frontend's specific search syntax into SQL WHERE clauses.

Programming Concepts/Content:

W001T005: Define ParseCustomQuery(query string, allowedColumns []string) (string, []interface{}, error) function.

This function takes the raw search string (e.g., "req.body:userinput res.statuscode=403") and a list of columns allowed for search (security check).

It will use string parsing, regex, or a simple state machine to break down the query.

It translates keywords like req.body into database column names (e.g., request_body).

It interprets operators (:, =) and boolean logic (AND, OR).

It returns a SQL WHERE clause fragment (e.g., "request_body LIKE ? AND status_code = ?") and a slice of corresponding interface{} arguments (to be used with parameterized queries).

Crucial: Implement robust input validation and parameterization to prevent SQL injection.

Relevant Tasks: W001T005.
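To make the parser task concrete, here is a minimal sketch of ParseCustomQuery with the signature from the plan. It is not the TSC implementation: the keyword-to-column map is hypothetical, terms are whitespace-separated (so values cannot contain spaces), there is no parenthesized grouping, and `%`/`_` inside LIKE values are not escaped. It does show the two properties the plan calls crucial: column names come only from a whitelist, and values are always returned as bound parameters.

```go
package main

import (
	"fmt"
	"strings"
)

// keywordColumns maps search keywords to database columns (hypothetical values).
var keywordColumns = map[string]string{
	"req.body":       "request_body",
	"res.statuscode": "status_code",
}

// ParseCustomQuery turns e.g. "req.body:userinput res.statuscode=403" into a
// parameterized WHERE fragment. ':' means substring match (LIKE), '=' means
// equality. Adjacent terms are joined with AND unless an explicit AND/OR token
// appears between them.
func ParseCustomQuery(query string, allowedColumns []string) (string, []interface{}, error) {
	allowed := make(map[string]bool, len(allowedColumns))
	for _, c := range allowedColumns {
		allowed[c] = true
	}

	var parts []string
	var args []interface{}
	connector := "AND"
	for _, tok := range strings.Fields(query) {
		if up := strings.ToUpper(tok); up == "AND" || up == "OR" {
			connector = up
			continue
		}

		// The first ':' or '=' splits the keyword from the value.
		idx := strings.IndexAny(tok, ":=")
		if idx <= 0 || idx == len(tok)-1 {
			return "", nil, fmt.Errorf("malformed search term %q", tok)
		}
		key, op, value := tok[:idx], tok[idx], tok[idx+1:]

		col, ok := keywordColumns[key]
		if !ok || !allowed[col] {
			return "", nil, fmt.Errorf("search on %q is not allowed", key)
		}

		// Only whitelisted column names reach the SQL string; values are bound.
		clause := col + " = ?"
		if op == ':' {
			clause = col + " LIKE ?"
			args = append(args, "%"+value+"%")
		} else {
			args = append(args, value)
		}
		if len(parts) > 0 {
			parts = append(parts, connector)
		}
		parts = append(parts, clause)
		connector = "AND"
	}
	return strings.Join(parts, " "), args, nil
}

func main() {
	where, args, err := ParseCustomQuery("req.body:userinput res.statuscode=403",
		[]string{"request_body", "status_code"})
	fmt.Println(where, args, err)
}
```

Because the fragment can contain OR, a caller combining it with other conditions should wrap it in parentheses; otherwise SQL operator precedence could let a search term bypass a context filter.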

File: internal/api/handlers/data_handlers.go

Purpose: Implement the "Universal Data API" endpoint that serves paginated, sorted, filtered, and searched data for any table.

Programming Concepts/Content:

W001T006: Implement func (h *DataHandlers) GetData(w http.ResponseWriter, r *http.Request) handler for GET /api/data/{tableName}.

Extract tableName from URL params. Validate tableName against a whitelist of allowed tables for security!

Extract page, pageSize, sort, order, filter (JSON string), search_query (your custom syntax), context_id, context_column from query parameters. Parse them into appropriate Go types.

Build the base SELECT * FROM {tableName} and SELECT COUNT(*) FROM {tableName} queries.

Apply Contextual Filtering: If context_id and context_column are provided, and tableName is defined as IsTargetContextual in its DataTableDefinition, add WHERE {context_column} = ?. (Requires lookup of DataTableDefinition).

Apply Standard Filters: Parse filter JSON into WHERE clauses.

Apply Custom Search: Call data_query_parser.ParseCustomQuery and add its returned WHERE clause and arguments.

Apply Sorting: Add ORDER BY clause.

Apply Pagination: Add LIMIT and OFFSET clauses ((page-1)*pageSize).

Execute both the COUNT(*) query (with all filters/search) and the data query.

Return data and total_records in JSON: { "data": [...], "total_records": N }.

Relevant Tasks: W001T006.
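The query-assembly steps of GetData can be sketched as a pure function that the handler would call after parsing its query parameters. This is a hedged illustration: the table/column whitelist, the pageSize default and cap, and the helper name buildDataQuery are all hypothetical, the JSON column filters are omitted, and the `?` placeholders assume a SQLite/MySQL-style driver (PostgreSQL drivers typically expect `$1`, `$2`, and so on). The caller is assumed to pass a context column only when the table's definition has IsTargetContextual set.

```go
package main

import (
	"fmt"
	"strings"
)

// allowedTables whitelists table names and the columns usable for sorting or
// context filtering. The entries are hypothetical examples.
var allowedTables = map[string][]string{
	"proxy_logs": {"id", "status_code", "request_body", "target_id"},
	"domains":    {"id", "name", "target_id"},
}

// dataQuery holds the two statements GetData would execute.
type dataQuery struct {
	CountSQL  string
	CountArgs []interface{}
	DataSQL   string
	DataArgs  []interface{} // CountArgs followed by the LIMIT and OFFSET values
}

func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

// buildDataQuery assembles the COUNT(*) and paginated SELECT statements.
// where/whereArgs would come from ParseCustomQuery.
func buildDataQuery(table, sortCol, order string, page, pageSize int,
	contextColumn string, contextID interface{},
	where string, whereArgs []interface{}) (dataQuery, error) {

	cols, ok := allowedTables[table]
	if !ok {
		return dataQuery{}, fmt.Errorf("table %q is not allowed", table)
	}

	var conds []string
	var args []interface{}
	if contextColumn != "" {
		if !contains(cols, contextColumn) {
			return dataQuery{}, fmt.Errorf("context column %q is not allowed", contextColumn)
		}
		conds = append(conds, contextColumn+" = ?")
		args = append(args, contextID)
	}
	if where != "" {
		// Parenthesize so an OR inside the search cannot escape the context filter.
		conds = append(conds, "("+where+")")
		args = append(args, whereArgs...)
	}
	whereSQL := ""
	if len(conds) > 0 {
		whereSQL = " WHERE " + strings.Join(conds, " AND ")
	}

	orderSQL := ""
	if sortCol != "" {
		if !contains(cols, sortCol) {
			return dataQuery{}, fmt.Errorf("sort column %q is not allowed", sortCol)
		}
		dir := "ASC"
		if strings.EqualFold(order, "desc") {
			dir = "DESC"
		}
		orderSQL = " ORDER BY " + sortCol + " " + dir
	}

	// Clamp pagination inputs; the 50/500 default and cap are arbitrary choices.
	if page < 1 {
		page = 1
	}
	if pageSize < 1 || pageSize > 500 {
		pageSize = 50
	}

	// Copy args so the count and data argument slices never share a backing array.
	dataArgs := append(append([]interface{}{}, args...), pageSize, (page-1)*pageSize)
	return dataQuery{
		CountSQL:  "SELECT COUNT(*) FROM " + table + whereSQL,
		CountArgs: args,
		DataSQL:   "SELECT * FROM " + table + whereSQL + orderSQL + " LIMIT ? OFFSET ?",
		DataArgs:  dataArgs,
	}, nil
}

func main() {
	q, err := buildDataQuery("proxy_logs", "status_code", "desc", 3, 25,
		"target_id", 7, "status_code = ?", []interface{}{"403"})
	if err != nil {
		panic(err)
	}
	fmt.Println(q.CountSQL)
	fmt.Println(q.DataSQL, q.DataArgs)
}
```

Keeping this logic free of `net/http` and `database/sql` makes it easy to unit-test, and the export handlers can reuse it by ignoring DataSQL's LIMIT/OFFSET variant.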

File: internal/api/handlers/export_handlers.go (NEW FILE)

Purpose: Implement API endpoints for exporting data in various formats.

Programming Concepts/Content:

W001T007: Implement handlers for GET /api/export/{tableName}.{format} (e.g., .txt, .csv, .json).

These handlers receive the same filter/sort/search parameters as GetData.

Call the same underlying database query logic as GetData but without LIMIT and OFFSET.

Format the retrieved data using Go's encoding/csv, encoding/json, or simple string concatenation for .txt.

Set appropriate HTTP headers (Content-Disposition, Content-Type) to trigger file download in the browser.

Relevant Tasks: W001T007.

File: internal/core/settings/widget_definitions.go (NEW FILE)

Purpose: Store the predefined configurations (DataTableDefinition) for DataTable widgets.

Programming Concepts/Content:

W001T008: Define DataTableDefinition struct (as described in our previous discussion).

W001T009: Define DataTableColumn and DataTableSortConfig structs.

W001T010: Store a list of these definitions.

Recommendation: Embed them as JSON files within the Go binary (embed.FS) for simplicity initially.

Example: data_table_definitions.json, listing the proxy_logs_viewer, domains_list, findings_viewer, js_analysis_findings, modifier_history, and other definitions.

W001T011: Implement a function GetDataTableDefinitions() ([]DataTableDefinition, error) to load these from embedded files.

Relevant Tasks: W001T008, W001T009, W001T010, W001T011.

File: internal/api/handlers/widget_handlers.go (NEW FILE)

Purpose: Provide API endpoint for frontend to fetch DataTableDefinitions.

Programming Concepts/Content:

W001T012: Implement func (h *WidgetHandlers) GetDataTableDefinitions(w http.ResponseWriter, r *http.Request) handler for GET /api/widgets/data-table-definitions.

Calls settings.GetDataTableDefinitions() and returns the list as JSON.

Relevant Tasks: W001T012.

2. SvelteKit Frontend Modifications

File: src/lib/types/api.ts (MODIFY - or auto-generate via APP-006)

Purpose: Define TypeScript interfaces for the new data models.

Programming Concepts/Content:

W001T013: Add TypeScript interfaces for DataTableDefinition, DataTableColumn, DataTableSortConfig, and any other related types defined in Go.

File: src/lib/stores/initializer.ts (MODIFY)

Purpose: Fetch DataTableDefinitions on application startup.

Programming Concepts/Content:

W001T014: In initializeAllStores(), add fetchDataTableDefinitions() to the Promise.allSettled block.

File: src/lib/stores/dataTableStore.ts (NEW FILE)

Purpose: A Svelte store to hold the fetched DataTableDefinitions.

Programming Concepts/Content:

W001T015: Define export const dataTableDefinitions = writable<DataTableDefinition[]>([]);.

Define export async function fetchDataTableDefinitions(fetch: typeof window.fetch). This function will call GET /api/widgets/data-table-definitions and populate the store.

File: src/lib/widgets/DataTable.svelte (NEW FILE)

Purpose: The core reusable component for displaying tabular data.

Programming Concepts/Content:

W001T016: Define props:

```typescript
// Inside <script lang="ts"> in DataTable.svelte
import type { DataTableColumn, DataTableSortConfig } from '$lib/types/api';

export let endpoint: string; // e.g., "/api/data/proxy_logs"
export let columns: DataTableColumn[];
export let defaultSort: DataTableSortConfig | undefined = undefined;
export let defaultFilters: Record<string, any> | undefined = undefined; // For initial filtering
export let customSearchEnabled: boolean = false;
export let exportBaseUrl: string | undefined = undefined; // e.g., "/api/export/proxy_logs"
export let exportTxtColumn: string | undefined = undefined; // For .txt exports
export let isTargetContextual: boolean = false; // Does this data filter by target/platform?
export let contextColumnName: string = 'target_id'; // Which column in the DB
export let showTargetFilterToggle: boolean = true; // Show the "Show All Targets" toggle
```

W001T017: Internal State: currentPage, pageSize, currentSort, currentFilters, currentSearchQuery, data, totalRecords, isLoading.

W001T018: Data Fetching (fetchData()):

Constructs URL with all parameters (page, pageSize, sort, order, filter, search_query, context_id, context_column).

context_id and context_column are derived from a subscription to selectedPlatformId/selectedTargetId, and are sent only when isTargetContextual is true.

Calls endpoint, updates data and totalRecords.

W001T019: UI Controls:

Render dynamic headers from columns prop (clickable for sorting).

Pagination controls (currentPage, pageSize dropdown, Prev/Next buttons).

External search input bound to currentSearchQuery.

"Show All Targets" Toggle: Renders only if showTargetFilterToggle is true.

Dynamic Column Filters (Header-based): Placeholder for future implementation (will need API for filter options).

W001T020: Export Buttons: Buttons for .txt, .csv, .json exports, building URL with current filters/sort/search and exportBaseUrl.

W001T021: User-Resizable Columns (via interact.js): Implement interact.js logic within column headers to allow resizing columns. Save updated widths (emit to parent, then save to WidgetLayout.props).

W001T022: Display Cell Content: Basic rendering of row[col.id].

Relevant Tasks: W001T016 to W001T022.

File: frontend/src/lib/widgets/WorkspaceManager.svelte (MODIFY)

Purpose: To instantiate DataTable widgets using their definitions.

Programming Concepts/Content:

W001T023: Modify componentMap to include 'DataTable': DataTable.

W001T024: Update addWidget function:

When adding a DataTable widget, it will receive a DataTableDefinition (e.g., proxy_logs_viewer).

The addWidget function (likely in workspaceStore.ts) creates the WidgetLayout and copies the DataTableDefinition's relevant fields into the WidgetLayout.props for that specific DataTable instance.

Relevant Tasks: W001T023, W001T024.

This provides a complete, file-by-file roadmap for building WIDGET-001: The Data Grid for The Shadow Cortex!

[END WIDGET-001: THE DATA GRID - DETAILED IMPLEMENTATION PLAN]

Phase 2: Executing with Precision using Gemini Code Assist (IDE)

Now that I have this incredibly detailed roadmap from my Gemini chat “project manager,” the shift to implementation with Gemini Code Assist becomes remarkably efficient. Code Assist, working directly within my IDE, has a much narrower contextual understanding – it primarily sees the file I’m currently working on and related imports. This is precisely why the upfront planning with Gemini chat is so critical.

The Workflow: Plan to Code

With the comprehensive plan from Phase 1 as my guide, I can now approach Code Assist with highly focused, self-contained tasks. This dramatically streamlines the coding process:

- For internal/db/models.go (W001T001-W001T004): I can simply give Code Assist the exact struct definitions needed, including json tags, and it generates the boilerplate quickly and accurately. This saves me from manually typing out repetitive Go struct fields and keeps them consistent.

- For internal/core/data_query_parser/parser.go (W001T005): I can feed it the specific function signature and the detailed parsing logic, knowing that the “how-to-parse” question has already been explored in depth in the chat interface. I can then focus on verifying its output and testing edge cases, rather than spending time on the initial implementation.

- For src/lib/widgets/DataTable.svelte (W001T016-W001T022): I can provide the precise props, internal state, and even the high-level logic for data fetching and UI controls from the plan. Code Assist then handles the Svelte syntax, reactivity ($: statements), and basic component structure, allowing me to focus on the intricate UI interactions and styling.

The Synergistic Power

This clear division of labor transforms the development process. Gemini chat handles the complex, multi-file, architectural thinking and detailed task breakdown. Gemini Code Assist then acts as a highly efficient, in-IDE assistant for writing the actual code, file by file, based on those granular instructions. This synergy allows me to leverage each AI tool for its distinct strengths, leading to far fewer errors, less “context loss” frustration, and a truly accelerated development cycle even for intricate features like the Data Grid.

Looking Ahead: Continued Evolution

The progress on The Shadow Cortex, enabled by this evolving AI collaboration playbook, is exhilarating. Building a system of this complexity, with its deep integration needs and advanced functionalities, would typically take significantly longer. By strategically employing Gemini chat for planning and Code Assist for execution, I’m able to maintain momentum and clarity.

This Data Grid feature is just one example of how I’m applying this process. As TSC continues to grow, integrating more complex modules for advanced security testing and analysis, I anticipate refining this collaborative methodology even further. The journey of programming with AI isn’t just about the tools; it’s about continuously learning how to best partner with them to transform complex ideas into robust, functional software.